Gradient Descent - The Deep Learning Entry Point

Let's bring all our attention here: gradient descent is one of the most popular and most widely used optimization techniques in machine learning and deep learning.

Gradient descent's job is to optimize a given equation. So what might that equation be?

It can be almost any equation you can take a derivative of: a linear equation (y = mx + b), a multivariate linear equation, a polynomial equation, and so on.

So how does it optimize?

Before answering this question, let's recall linear regression. How did we optimize our linear regression model? How did we find the values of m and b that best fit the data? You remember: we used the least squares method (minimizing the sum of squared errors), and for that we derived a formula.
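As a quick reminder, here is a minimal sketch of that closed-form approach. The formula from the earlier article is not repeated here, so this assumes the standard least squares solution for a single input variable; the function name `least_squares_fit` and the sample data are made up for illustration.

```python
def least_squares_fit(xs, ys):
    """Closed-form least squares fit of y = m*x + b (single input variable)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: sum of co-deviations of x and y divided by sum of squared deviations of x
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    m = sxy / sxx
    # Intercept: the fitted line passes through the point (mean_x, mean_y)
    b = mean_y - m * mean_x
    return m, b


# Illustrative data generated from y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
m, b = least_squares_fit(xs, ys)
print(m, b)
```

Because the sample points lie exactly on a line, the fit recovers the slope and intercept of that line in one shot, with no iteration.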

In this article, I will explain gradient descent on top of linear regression, as that makes it easy to teach and understand.

Gradient descent's job is also to give us the best values of m and b, just as our previous linear regression model did, but using a different approach.

So you might be thinking: if it does the same thing, why on earth are we using gradient descent? Can't we use the same method we used earlier?

Yes, for some simple equations we can. But when we go into deep learning, our equations are not linear or polynomial; they are just too vast and complex. To solve those equations (by solving I mean finding the best values of their parameters), we have to use an optimization algorithm, and gradient descent is one of them.

As the name suggests, gradient means the slope of the tangent line, and descent means moving downward toward the minimum point. So we can conclude that we find the minimum value by using the slope of the tangent line. Just hearing the terms tangent and slope, people from a math background should think of derivatives. What does a derivative give? It gives the slope of the tangent line drawn at a particular point.

To understand how gradient descent works, we need to visualize it in our mind.

Let's take an example.

You are at the top of a mountain, blindfolded, and your task is to get to the bottom. So how would you start?

First of all, you find the direction: which way to move, up or down? Then you move in that direction for a certain number of footsteps. Let's say you take 10 steps, and then you estimate your direction again: left or right? The left path might lead you up again, or maybe down; which one you choose is up to you. Finally, if you are good enough, you might reach the bottom after many tries.

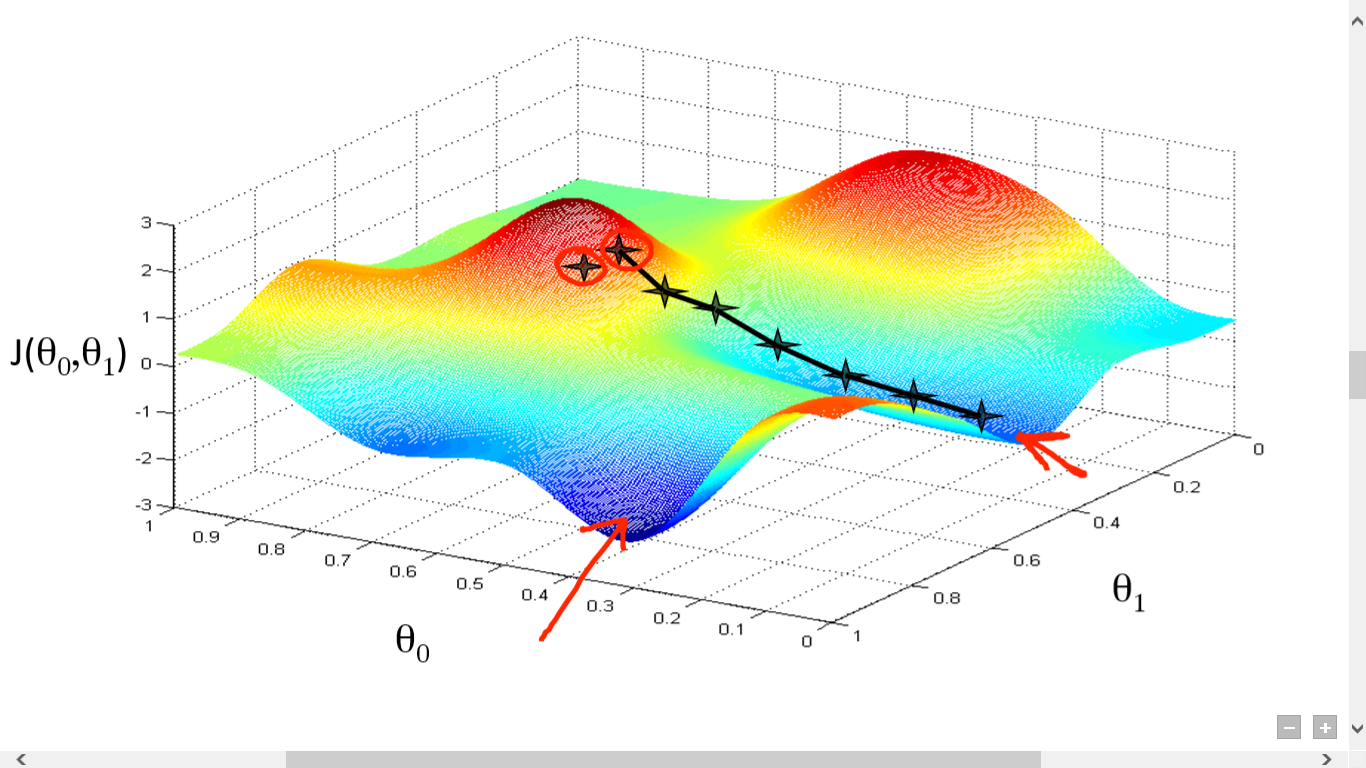

That's exactly how gradient descent works. The figure shows how you go from the top of the mountain to the bottom.

Your first task is to find the direction to move in. For that, you find the partial derivative at your current point, which gives the slope of the tangent line at that point, and that slope determines the direction of your movement. If the slope is negative, moving forward (increasing the parameter) takes you downhill, so you are going in the right direction. If it is positive, moving forward means you are not descending but ascending, so you step back instead. Either way, you move against the sign of the slope.

The other thing you have to decide yourself is how far to move: 2 steps, 10 steps, 100 steps? That is up to you, and it is what we call the learning rate (a hyperparameter).
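To make the direction rule concrete, here is a minimal sketch on a one-variable function. The function f(x) = (x - 3)^2, the starting points, and the names `df` and `descend` are all chosen just for illustration; they are not from the original article.

```python
def f(x):
    # A simple bowl-shaped "mountain" with its lowest point at x = 3
    return (x - 3.0) ** 2


def df(x):
    # Derivative of f: the slope of the tangent line at x
    return 2.0 * (x - 3.0)


def descend(x, learning_rate=0.1, steps=50):
    for _ in range(steps):
        # Move against the sign of the slope:
        # negative slope -> x increases, positive slope -> x decreases
        x = x - learning_rate * df(x)
    return x


x_left = descend(-5.0)   # starts left of the minimum, where the slope is negative
x_right = descend(10.0)  # starts right of the minimum, where the slope is positive
print(x_left, x_right)
```

Both starting points end up close to x = 3, even though one had to move forward and the other backward. The sign of the slope took care of the direction, and the learning rate only controlled the step size.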

The picture below shows how gradient descent works iteratively.

I know it's kind of hard to grasp the concept at first glance, but you can become familiar with it if you try it on your own, visualizing it and looking into the math.

So now let's derive it for our linear equation. We want to minimize the mean squared error with respect to the parameters m and b, so we take the partial derivatives with respect to m and b and iteratively update the values of m and b until we reach our optimal solution.
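The formulas themselves are not visible in the text here, so what follows is the standard derivation, written under the assumption that the error is the mean squared error over n data points (x_i, y_i) and that the learning rate is called α. With

$$
E(m, b) = \frac{1}{n} \sum_{i=1}^{n} \bigl(y_i - (m x_i + b)\bigr)^2
$$

the partial derivatives follow from the chain rule:

$$
\frac{\partial E}{\partial m} = -\frac{2}{n} \sum_{i=1}^{n} x_i \bigl(y_i - (m x_i + b)\bigr),
\qquad
\frac{\partial E}{\partial b} = -\frac{2}{n} \sum_{i=1}^{n} \bigl(y_i - (m x_i + b)\bigr)
$$

and each iteration moves both parameters against their slopes:

$$
m \leftarrow m - \alpha \frac{\partial E}{\partial m},
\qquad
b \leftarrow b - \alpha \frac{\partial E}{\partial b}
$$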

In the update step, alpha (the learning rate) is a hyperparameter that you choose yourself. Ideally it should be neither very large nor very small.

A learning rate that is too large may make the updates jump over the minimum point, and the function may never converge.

A learning rate that is too small may make the function converge very, very slowly.
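A minimal sketch of the whole loop, under a few assumptions, lets you see both effects. It assumes the mean squared error for y = mx + b as the quantity being minimized; the data, the three learning rates, the step counts, and the names `gradient_descent` and `mse` are picked only for illustration.

```python
def mse(xs, ys, m, b):
    # Mean squared error of the line y = m*x + b on the data
    return sum((y - (m * x + b)) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs, ys, learning_rate, steps):
    n = len(xs)
    m, b = 0.0, 0.0  # arbitrary starting point
    for _ in range(steps):
        errors = [y - (m * x + b) for x, y in zip(xs, ys)]
        # Partial derivatives of the mean squared error with respect to m and b
        grad_m = -2.0 / n * sum(x * e for x, e in zip(xs, errors))
        grad_b = -2.0 / n * sum(errors)
        # Step against the slope, scaled by the learning rate
        m -= learning_rate * grad_m
        b -= learning_rate * grad_b
    return m, b


# Illustrative data generated from y = 2x + 1
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]

mse_start = mse(xs, ys, 0.0, 0.0)
mse_good = mse(xs, ys, *gradient_descent(xs, ys, learning_rate=0.05, steps=2000))
mse_slow = mse(xs, ys, *gradient_descent(xs, ys, learning_rate=0.001, steps=2000))
# Only a few steps here: with this learning rate the values grow very quickly
mse_large = mse(xs, ys, *gradient_descent(xs, ys, learning_rate=0.2, steps=20))

print("start:", mse_start)
print("good :", mse_good)
print("slow :", mse_slow)
print("large:", mse_large)
```

On this data, the moderate learning rate drives the error to nearly zero, the tiny one is still noticeably off after the same number of steps, and the large one ends up with a bigger error than where it started. The exact thresholds depend on the data, so treat these three values as examples rather than recommendations.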

I know it's kind of hard for me to explain this in text. If people do not get it and want more explanation, I can make a video, preferably in the Nepali language.
